In this example, I will ship logs to Loki using Fluent Bit, running on the k3s distribution of Kubernetes on my Raspberry Pi cluster.

I am using the manifests referenced in the Fluent Bit documentation as the source.

I have a Loki instance running on 192.168.0.20 and listening on port 3100. I will pass its push endpoint to Fluent Bit through the FLUENT_LOKI_URL environment variable.
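As a rough sketch of how that URL is put together, the helper below builds the push endpoint from a host and port. The function name loki_push_url is made up for illustration; the /loki/api/v1/push path is the one used later in the DaemonSet manifest, and /ready is Loki's readiness endpoint.

```shell
# Hypothetical helper (not part of Loki or Fluent Bit):
# build the Loki push URL from a host and a port.
loki_push_url() {
  printf 'http://%s:%s/loki/api/v1/push\n' "$1" "$2"
}

# The value that ends up in the FLUENT_LOKI_URL environment variable.
FLUENT_LOKI_URL=$(loki_push_url 192.168.0.20 3100)
echo "$FLUENT_LOKI_URL"

# Optional reachability check (needs network access to the Loki host):
# curl -s http://192.168.0.20:3100/ready
```

Keeping the host and port in one place makes it easier to point the same manifest at a different Loki instance.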

Create the namespace:

$ kubectl create namespace logging

Create the service account and role:

$ kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-service-account.yaml

$ kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-role.yaml

$ kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-role-binding.yaml

Fluent Bit will be set up as a DaemonSet so that it runs on every node, and its configuration will be stored in a ConfigMap.

The original config is available at fluent-bit-configmap.yaml, but since we are logging to Loki rather than Elasticsearch, we will download it and edit it:

$ wget https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/output/elasticsearch/fluent-bit-configmap.yaml

And then add the Loki output config:

  output-loki.conf: |
    [OUTPUT]
        Name                   loki
        Match                  *
        Url                    ${FLUENT_LOKI_URL}
        BatchWait              1s
        BatchSize              30720
        Labels                 {job="fluentbit", stack="pistack"}
        AutoKubernetesLabels   true

Also adjust the main fluent-bit.conf section so that it includes the file we are introducing:

...

@INCLUDE output-loki.conf

...
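It is easy to add an @INCLUDE line and forget the matching section (or the other way around). The sketch below lists every @INCLUDE target that has no "<name>: |" key in the file. The function name missing_includes is hypothetical, and the check assumes the file keeps the upstream layout where each config section is declared as "<name>: |".

```shell
# Hypothetical helper: print @INCLUDE targets that have no matching
# "<name>: |" key in the given config map file.
missing_includes() {
  file=$1
  # Collect the unique file names that follow @INCLUDE.
  grep -oE '@INCLUDE[[:space:]]+[^[:space:]]+' "$file" |
    awk '{ print $2 }' | sort -u |
    while read -r name; do
      # Report the name if no "<name>: |" key exists in the file.
      grep -qF -- "${name}: |" "$file" || echo "$name"
    done
}

# Usage, assuming the edited file is in the current directory:
# missing_includes fluent-bit-configmap.yaml
```

An empty result means every included file has a section in the config map.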

So my complete config map will look like this:

apiVersion: v1
kind: ConfigMap
metadata:
  name: fluent-bit-config
  namespace: logging
  labels:
    k8s-app: fluent-bit
data:
  # Configuration files: server, input, filters and output
  # ======================================================
  fluent-bit.conf: |
    [SERVICE]
        Flush         1
        Log_Level     info
        Daemon        off
        Parsers_File  parsers.conf
        HTTP_Server   On
        HTTP_Listen   0.0.0.0
        HTTP_Port     2020

    @INCLUDE input-kubernetes.conf
    @INCLUDE filter-kubernetes.conf
    @INCLUDE output-loki.conf

  input-kubernetes.conf: |
    [INPUT]
        Name              tail
        Tag               kube.*
        Path              /var/log/containers/*.log
        Parser            docker
        DB                /var/log/flb_kube.db
        Mem_Buf_Limit     5MB
        Skip_Long_Lines   On
        Refresh_Interval  10

  filter-kubernetes.conf: |
    [FILTER]
        Name                kubernetes
        Match               kube.*
        Kube_URL            https://kubernetes.default.svc:443
        Kube_CA_File        /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        Kube_Token_File     /var/run/secrets/kubernetes.io/serviceaccount/token
        Kube_Tag_Prefix     kube.var.log.containers.
        Merge_Log           On
        Merge_Log_Key       log_processed
        K8S-Logging.Parser  On
        K8S-Logging.Exclude Off

  output-elasticsearch.conf: |
    [OUTPUT]
        Name            es
        Match           *
        Host            ${FLUENT_ELASTICSEARCH_HOST}
        Port            ${FLUENT_ELASTICSEARCH_PORT}
        Index           fluent-pistack-k3s
        Logstash_Format On
        Replace_Dots    On
        Retry_Limit     False
        HTTP_User       user
        HTTP_Passwd     pass
        tls             On

  output-stdout.conf: |
    [OUTPUT]
        Name            stdout
        Match           *

  output-loki.conf: |
    [OUTPUT]
        Name                   loki
        Match                  *
        Url                    ${FLUENT_LOKI_URL}
        BatchWait              1s
        BatchSize              30720
        Labels                 {job="fluentbit", stack="pistack"}
        AutoKubernetesLabels   true

  parsers.conf: |
    [PARSER]
        Name   apache
        Format regex
        Regex  ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name   apache2
        Format regex
        Regex  ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^ ]*) +\S*)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name   apache_error
        Format regex
        Regex  ^\[[^ ]* (?<time>[^\]]*)\] \[(?<level>[^\]]*)\](?: \[pid (?<pid>[^\]]*)\])?( \[client (?<client>[^\]]*)\])? (?<message>.*)$

    [PARSER]
        Name   nginx
        Format regex
        Regex ^(?<remote>[^ ]*) (?<host>[^ ]*) (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name   json
        Format json
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name        docker
        Format      json
        Time_Key    time
        Time_Format %Y-%m-%dT%H:%M:%S.%L
        Time_Keep   On

    [PARSER]
        Name        syslog
        Format      regex
        Regex       ^\<(?<pri>[0-9]+)\>(?<time>[^ ]* {1,2}[^ ]* [^ ]*) (?<host>[^ ]*) (?<ident>[a-zA-Z0-9_\/\.\-]*)(?:\[(?<pid>[0-9]+)\])?(?:[^\:]*\:)? *(?<message>.*)$
        Time_Key    time
        Time_Format %b %d %H:%M:%S

Create the config map:

$ kubectl apply -f fluent-bit-configmap.yaml

The DaemonSet manifest comes from fluent-bit-ds.yaml, but the image it uses is not built for ARM, so in this post I demonstrated how to build images for ARM.

I have included that image in the manifest below and set my Loki URL in the env section as FLUENT_LOKI_URL:

$ cat

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluent-bit
  namespace: logging
  labels:
    k8s-app: fluent-bit-logging
    version: v1
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    matchLabels:
      k8s-app: fluent-bit-logging
  template:
    metadata:
      labels:
        k8s-app: fluent-bit-logging
        version: v1
        kubernetes.io/cluster-service: "true"
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "2020"
        prometheus.io/path: /api/v1/metrics/prometheus
    spec:
      containers:
      - name: fluent-bit
        image: pistacks/fluent-bit-plugin-loki:armv7-latest
        #image: fluent/fluent-bit:arm32v7-1.6.1
        imagePullPolicy: Always
        ports:
          - containerPort: 2020
        env:
        - name: FLUENT_LOKI_URL
          value: "http://192.168.0.20:3100/loki/api/v1/push"
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: fluent-bit-config
          mountPath: /fluent-bit/etc/
      terminationGracePeriodSeconds: 10
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: fluent-bit-config
        configMap:
          name: fluent-bit-config
      serviceAccountName: fluent-bit
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      - operator: "Exists"
        effect: "NoExecute"
      - operator: "Exists"
        effect: "NoSchedule"

Go ahead and create the DaemonSet:

$ kubectl apply -f fluent-bit-ds.yaml

Once all the pods have checked in, we can verify:

$ kubectl get pods -n logging -o wide

NAME               READY   STATUS    RESTARTS   AGE   IP           NODE     NOMINATED NODE   READINESS GATES
fluent-bit-sg62c   1/1     Running   0          8h    10.42.4.24   rpi-03   <none>           <none>
fluent-bit-s4gx4   1/1     Running   0          8h    10.42.2.92   rpi-06   <none>           <none>
fluent-bit-8p76b   1/1     Running   0          8h    10.42.1.82   rpi-07   <none>           <none>
fluent-bit-fnvmd   1/1     Running   0          8h    10.42.3.23   rpi-02   <none>           <none>
fluent-bit-zsp82   1/1     Running   0          8h    10.42.0.77   rpi-05   <none>           <none>
fluent-bit-89xl5   1/1     Running   0          8h    10.42.5.23   rpi-01   <none>           <none>
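With more than a handful of nodes, scanning the STATUS column by eye gets tedious. As a small sketch, the filter below reads the output of kubectl get pods on stdin and prints only the pods that are not Running. The function name pods_not_running is made up for illustration, and it assumes the default column order where STATUS is the third column.

```shell
# Hypothetical helper: read `kubectl get pods` output on stdin and print
# the name of every pod whose STATUS column is not "Running".
pods_not_running() {
  # Skip the header row and blank lines; STATUS is the third column.
  awk 'NR > 1 && NF > 0 && $3 != "Running" { print $1 }'
}

# Usage against the cluster:
# kubectl get pods -n logging -o wide | pods_not_running
```

No output means every Fluent Bit pod reports Running.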

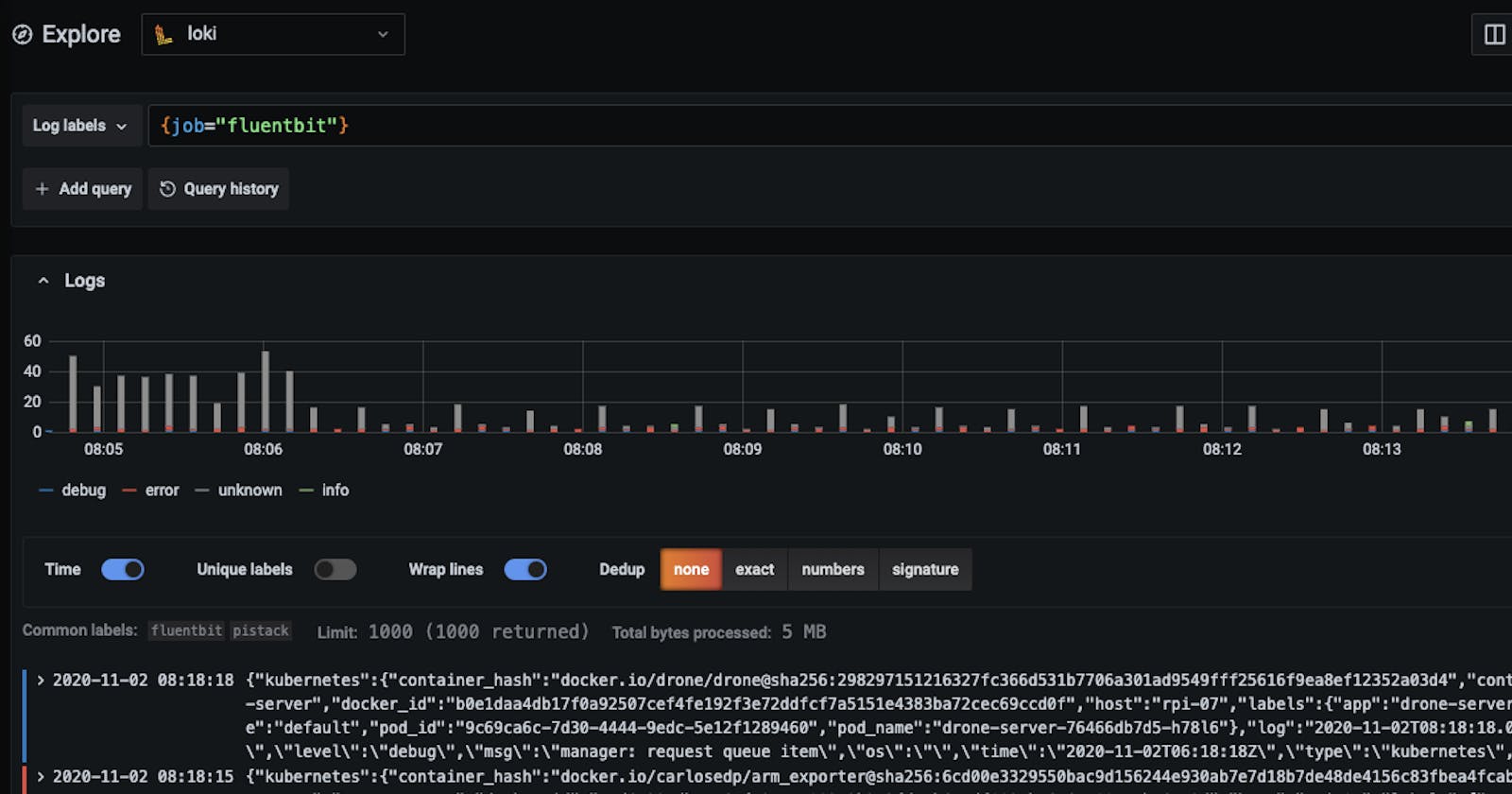

Head over to Grafana and check if we can see logs under the job fluentbit:

Let's test it out by running a pod that just pings:

$ kubectl run --rm -it --restart=Never debug --image=busybox -- ping 8.8.8.8

Now let's look for logs with the following labels:

{job="fluentbit", container_name="debug"}
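The same selector can also be run from the command line against Loki's HTTP API, which is handy when Grafana is not at hand. This is only a sketch: the function names logql_selector and loki_query are hypothetical, the base URL is the Loki instance from earlier in this post, and the limit of 10 entries is an arbitrary choice.

```shell
# Hypothetical helper: build a LogQL stream selector from a job label
# and a container name.
logql_selector() {
  printf '{job="%s", container_name="%s"}\n' "$1" "$2"
}

# Hypothetical helper: send a LogQL query to Loki's query_range endpoint.
# $1 is the Loki base URL, $2 is the LogQL query.
loki_query() {
  curl -G -s "$1/loki/api/v1/query_range" \
    --data-urlencode "query=$2" \
    --data-urlencode "limit=10"
}

# Usage (needs network access to the Loki host):
# loki_query http://192.168.0.20:3100 "$(logql_selector fluentbit debug)"
```

The response is JSON, so it can be piped into a tool such as jq for easier reading.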

Resources: